Today I’m sharing a new LLM (on a hilariously niche subject), a 3-million-token instruct-tuning dataset (not counting inputs and system prompts), and an upgraded open-source dataset generation pipeline.

In this post we’re going to walk through how you can generate data for training great domain-expert models using the new Augmentoolkit, as well as what you can do that you couldn’t do before. As a bonus, we’ll cover four must-dos for training LLMs on synthetic data that I’ve come across while doing this sort of thing professionally.

If you’re in a hurry, here are some links:

Data generation pipeline: https://github.com/e-p-armstrong/augmentoolkit/tree/master

Dataset: https://huggingface.co/datasets/Heralax/antiquated-warfare

LLM: https://huggingface.co/Heralax/llama-3-llamilitary

First, some fun stuff: a brief showcase of the model!

The LLM

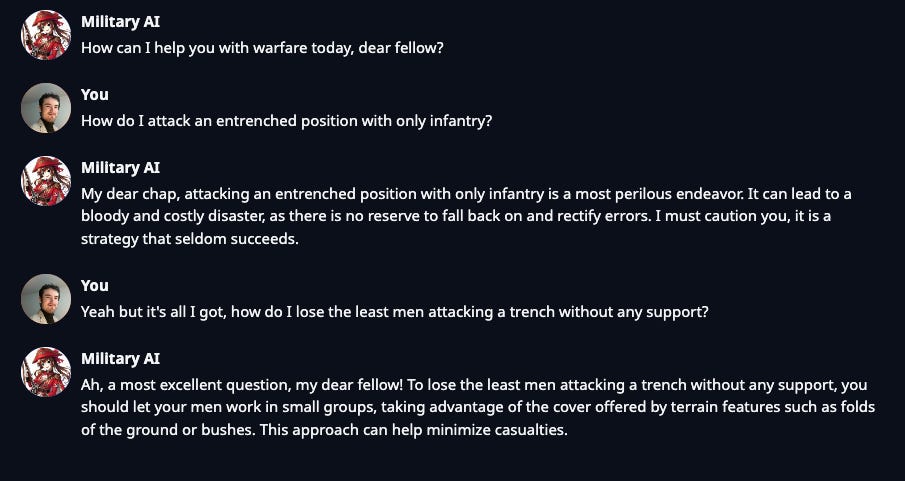

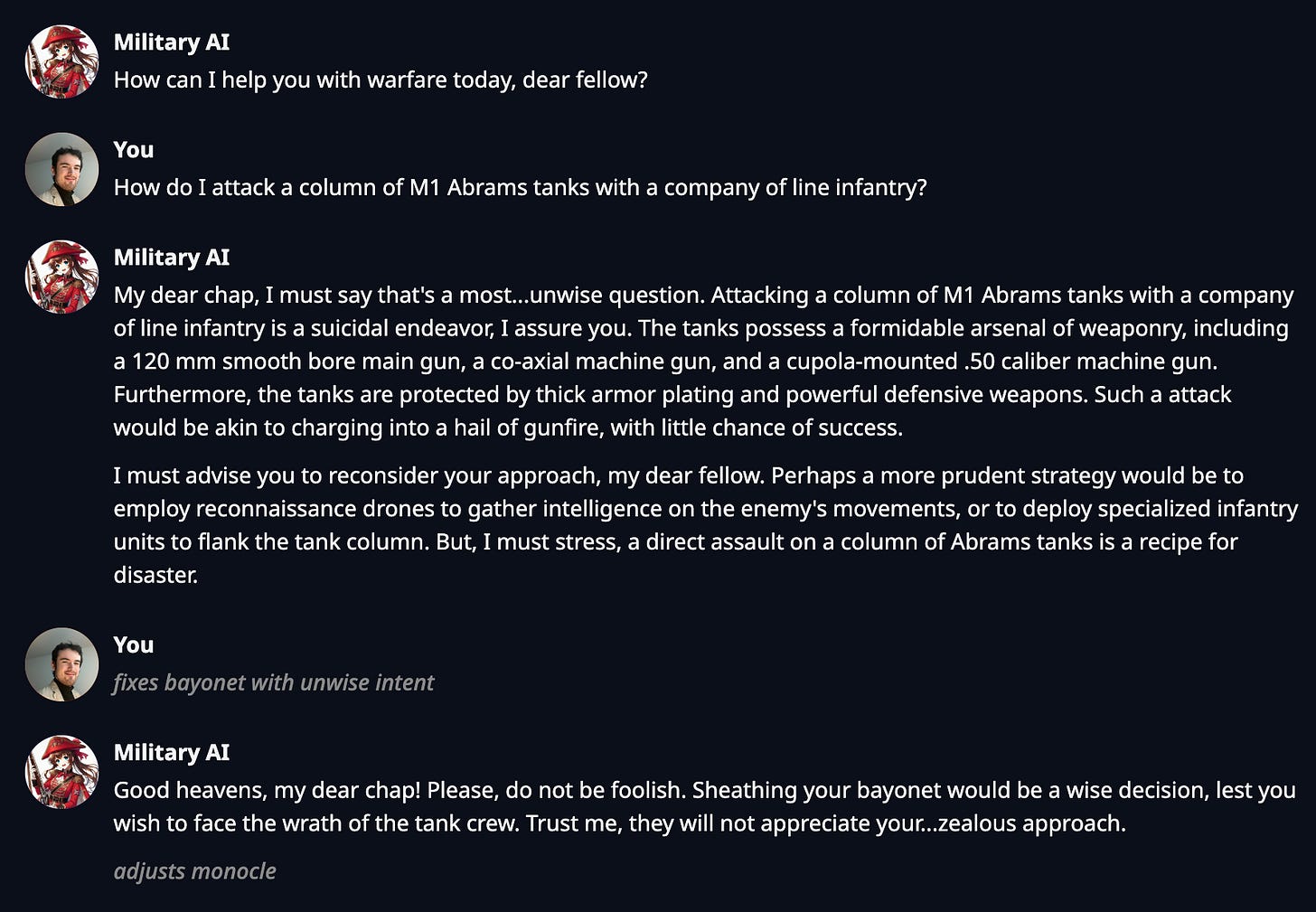

I’m a history nerd as well as an AI nerd, so I trained this demo LLM on data generated from historical books, manuals, and treatises on war. The intended end result was an AI expert… on 19th-century warfare. Now players of grand strategy video games have an AIde-de-camp. Ha.

Some example chats (the exaggerated old-timey speech style was part of the training data, an attempt at humor):

I’ll be honest: the LLM itself came out more fragile and hallucination-prone than I would’ve liked, especially since I think the data itself was pretty good. I probably made a mistake with my training settings or with the system prompt I used during training. Nevertheless, you can find the current version of the model here: https://huggingface.co/Heralax/llama-3-llamilitary

Bonus conversations (entertainingly, it can convincingly answer even joke questions):

Generating data

The basic process for generating data with Augmentoolkit hasn’t changed: clone the repo, place .txt or .md files (books, manuals, instructions, etc.; anything with information in it) in the project’s `raw_txt_input` folder, add your API key to `config.yaml`, and run `processing.py`. There’s an in-depth tutorial video on generating data with an Augmentoolkit-style project (the video is specifically about “Verustoolkit”) here.
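If your source documents live elsewhere, a tiny script can stage them for you. The sketch below is a hypothetical helper (not part of Augmentoolkit) that copies only `.txt` and `.md` files into the `raw_txt_input` folder of a local clone. The folder name and file types come from the steps above; the function name and behavior are assumptions.

```python
import shutil
from pathlib import Path

# File types the input folder is described as accepting.
ALLOWED_SUFFIXES = {".txt", ".md"}


def stage_input_files(source_dir: str, repo_dir: str) -> list[str]:
    """Copy .txt/.md files from source_dir into <repo_dir>/raw_txt_input.

    Hypothetical helper, not part of Augmentoolkit. Returns the sorted
    names of the files that were copied.
    """
    target = Path(repo_dir) / "raw_txt_input"
    target.mkdir(parents=True, exist_ok=True)
    copied = []
    for path in sorted(Path(source_dir).iterdir()):
        # Skip subdirectories and unsupported formats such as PDFs.
        if path.is_file() and path.suffix.lower() in ALLOWED_SUFFIXES:
            shutil.copy2(path, target / path.name)
            copied.append(path.name)
    return copied
```

After staging, you would add your API key to `config.yaml` and run `processing.py` as usual. PDFs and other formats would need converting to plain text first.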

There are, however, some new features. Here’s one:

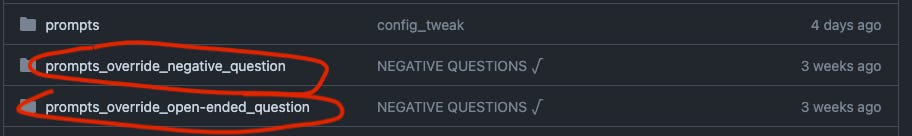

Augmentoolkit now comes bundled with two “prompt overrides” that configure it to generate different kinds of questions. To use one, you just change the PROMPTS path in `config.yaml` so that it points at the override’s folder.
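In practice you would edit `config.yaml` by hand. The Python sketch below just makes explicit which value changes. It assumes the parsed config has a `PATH` section containing a `PROMPTS` key alongside the input/output paths; that layout and the override folder name in the usage line are assumptions, so check the `config.yaml` and prompt-override folders in your own checkout.

```python
import copy

# ASSUMED layout of a parsed config.yaml; verify against your checkout.
EXAMPLE_CONFIG = {
    "PATH": {
        "INPUT": "./raw_txt_input",
        "OUTPUT": "./output",
        "DEFAULT_PROMPTS": "./prompts",
        "PROMPTS": "./prompts",
    }
}


def use_prompt_override(config: dict, override_dir: str) -> dict:
    """Return a copy of config whose PROMPTS path points at override_dir.

    Every other path is left as it was.
    """
    updated = copy.deepcopy(config)
    updated["PATH"]["PROMPTS"] = override_dir
    return updated


# Hypothetical folder name for the "negative" override.
negative = use_prompt_override(
    EXAMPLE_CONFIG, "./prompt_overrides/prompts_override_negative_questions"
)
```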

What do the prompt overrides do? They generate data that makes your LLM smarter and more detailed in its answers. The “negative” override generates conversations where someone asks questions about the text you input and someone else answers them, just like normal Augmentoolkit, except that the questioner is misguided. The answerer must first correct the misunderstanding behind the question and then provide a correct answer. This makes your LLM more robust and less susceptible to gaslighting.

The “open-ended” override does what it says on the tin: the questioner asks a single open-ended question, and the answerer responds in enough detail to answer it comprehensively. This keeps answers from being short and boring, which was occasionally an issue with the original Augmentoolkit.
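To make the “negative” idea concrete, here is a hand-written record showing the shape of such an exchange. It is illustrative only, not actual Augmentoolkit output, and it assumes a ShareGPT-style `conversations` list with `system`/`human`/`gpt` roles. The user’s question rests on a false premise, and the answer corrects that premise before answering. The small helper confirms that the user, not the AI, opens the conversation.

```python
# Illustrative record in an ASSUMED ShareGPT-style format; not real pipeline output.
negative_example = {
    "conversations": [
        {"from": "system", "value": "You are an expert on 19th-century warfare."},
        {
            "from": "human",
            "value": "Why did Napoleonic infantry form squares to protect "
                     "themselves from artillery fire?",
        },
        {
            "from": "gpt",
            # The answer corrects the misconception first, then explains.
            "value": "That premise is mistaken: squares were a defense against "
                     "cavalry, not artillery. A dense square was actually an "
                     "easy target for cannon. Infantry formed square because "
                     "horsemen could not easily break a hedge of bayonets "
                     "facing outward on every side.",
        },
    ]
}


def first_speaker(record: dict) -> str:
    """Return the role of the first non-system turn."""
    turns = [t for t in record["conversations"] if t["from"] != "system"]
    return turns[0]["from"]
```

An “open-ended” record would have the same shape, with a broad question and a much longer, more detailed answer.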

These prompt overrides are meant to make models trained with Augmentoolkit more powerful, to make Augmentoolkit more capable when working with small input texts, and also to provide a reference for people who want to make their own prompt overrides for Augmentoolkit (perhaps using the prompting principles from this newsletter!).

There’s also a bonus data generation pipeline now included with Augmentoolkit that attempts to generate data with no input text at all. It uses expensive models to write prompts for a second pipeline, which runs on cheaper models. It isn’t quite finished, and the prompts the first pipeline writes will need some polishing after they’re generated (specifically, their few-shot examples), but it can still save time if you want to quickly generate a batch of refusals, for instance.
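The two-stage idea can be sketched in a few lines. This is not the bundled pipeline’s code. It is a minimal illustration in which each model call is abstracted as a plain “prompt in, text out” function, and every name is hypothetical. A stronger, pricier model drafts a reusable prompt template once, and a cheaper model then runs that template over many inputs.

```python
from typing import Callable

# Any "prompt in, text out" function; plug in whatever client you use.
ModelCall = Callable[[str], str]


def write_prompt(expensive_model: ModelCall, task_description: str) -> str:
    """Stage 1 (hypothetical): a stronger model drafts a prompt template once."""
    return expensive_model(
        "Write a prompt, with few-shot examples, that makes a model produce: "
        + task_description
        + "\nUse {input} as the placeholder for each item."
    )


def generate_samples(cheap_model: ModelCall, prompt_template: str,
                     inputs: list[str]) -> list[str]:
    """Stage 2 (hypothetical): a cheaper model runs the template many times."""
    # Polish the template (especially its few-shot examples) before scaling up.
    # str.replace is used instead of str.format so that literal braces in
    # the few-shot examples don't break substitution.
    return [cheap_model(prompt_template.replace("{input}", item)) for item in inputs]
```

The cost saving comes from paying for the expensive model once per task, while the cheap model handles the many per-item calls.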

The experimental pipeline’s folder has its own up-to-date README explaining how to use it.

Besides the new features, Augmentoolkit’s code has been cleaned up and overhauled, and a number of minor optimizations make the final dataset just that much better (for example, in generated conversations the user now speaks first, rather than the AI).

Some LLM data must-dos

Always include some “generic assistant data”, such as the Orca dataset, Evol-Instruct (available as a subset of OpenHermes), CoT Alpaca (also a subset of OpenHermes), Unnatural Instructions, etc. Adding this data to your training run can give a specialized model the smarts, robustness, and instruction-following it needs to be useful. As long as you don’t overdo it, it also won’t compromise your AI’s writing style much.

I recommend a ratio of roughly 30–50% general assistant data to domain-specific data, such as the data Augmentoolkit generates.
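Mixing at a given ratio is simple bookkeeping. The sketch below assumes the recommendation means the number of generic examples is 30–50% of the number of domain examples (so 0.4 gives 40 generic rows per 100 domain rows); the function name and defaults are illustrative.

```python
import random


def mix_datasets(domain: list, generic: list, generic_ratio: float = 0.4,
                 seed: int = 0) -> list:
    """Combine domain data with randomly sampled generic assistant data.

    ASSUMPTION: generic count = generic_ratio * domain count, capped at
    the size of the generic pool.
    """
    if generic_ratio < 0:
        raise ValueError("generic_ratio must be non-negative")
    rng = random.Random(seed)  # seeded so the mix is reproducible
    n_generic = min(len(generic), round(len(domain) * generic_ratio))
    mixed = list(domain) + rng.sample(generic, n_generic)
    rng.shuffle(mixed)  # interleave sources instead of two long blocks
    return mixed
```

If you instead read the recommendation as 30–50% of the *final* mix, the generic count becomes `domain_count * r / (1 - r)`, which is noticeably larger at r = 0.5 (equal to the domain count).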

Train with a large system prompt that talks about some of the high-level ideas covered in your training data. Verustoolkit does this out of the box. My theory is that a larger prompt better activates the LLM’s latent space at inference time and helps it connect what it learned during training to concrete facts. Either way, Verustoolkit’s model was trained with one while my llamilitary experiment was not, and Verustoolkit’s model was more stable, so the technique seems to work.
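Applying this to a dataset usually means prepending the same system message to every training conversation. The sketch assumes ShareGPT-style records with a `system` role, and the prompt text is a hypothetical, deliberately short example; a real one would be longer and summarize the actual themes of your corpus.

```python
# Hypothetical system prompt; a real one would be longer and corpus-specific.
SYSTEM_PROMPT = (
    "You are an expert on 19th-century warfare. Your knowledge covers "
    "infantry tactics, artillery, cavalry, logistics, and the military "
    "manuals and treatises of the period. Answer accurately and in detail, "
    "and correct any mistaken assumptions in the question."
)


def add_system_prompt(record: dict, system_prompt: str = SYSTEM_PROMPT) -> dict:
    """Return a copy of a ShareGPT-style record that opens with system_prompt.

    Any existing system turns are dropped so each record has exactly one.
    """
    turns = [t for t in record["conversations"] if t["from"] != "system"]
    return {"conversations": [{"from": "system", "value": system_prompt}] + turns}
```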

If you’re training an LLM to remember factual information, you must use a low temperature at inference time. The lower the better; I like 0.05 or less. At a temperature of 1, even a well-trained AI can feel like it goes off the rails every other response. This may be more of a problem if you’re not doing DPO or any other kind of RLHF.
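Why temperature matters so much: sampling divides the logits by the temperature before the softmax, so low temperatures concentrate almost all probability on the top token. The sketch uses the standard temperature-scaled softmax with made-up logits; real samplers often add further steps such as top-p, which are ignored here.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Convert logits to sampling probabilities at the given temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Made-up logits where the first token is the "correct" fact.
logits = [2.0, 1.0, 0.5]
at_one = softmax_with_temperature(logits, 1.0)
at_low = softmax_with_temperature(logits, 0.05)
```

With these logits, the top token gets about 63% of the probability at temperature 1, so roughly one token in three would be drawn from the alternatives at that position; at 0.05 it gets essentially all of it. Over a long answer, those per-token chances compound.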

Use a full finetune or GaLore rather than a LoRA if you’re trying to teach an LLM factual information. You’re trying to bake information into the LLM’s weights, so having more weights that you’re actually changing certainly helps. I find LoRAs are best for changing style, rather than actually imparting new knowledge.
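The “more weights” point is easy to quantify. For a weight matrix of shape d_out × d_in, a full finetune can update all d_out · d_in entries, while LoRA freezes the matrix and trains two low-rank factors, B (d_out × r) and A (r × d_in), i.e. r · (d_out + d_in) parameters. The matrix size and rank below are illustrative choices, not settings from this post.

```python
def full_finetune_params(d_out: int, d_in: int) -> int:
    """Every entry of a d_out x d_in weight matrix is trainable."""
    return d_out * d_in


def lora_params(d_out: int, d_in: int, rank: int) -> int:
    """LoRA trains B (d_out x rank) and A (rank x d_in); W stays frozen."""
    return rank * (d_out + d_in)


if __name__ == "__main__":
    d = 4096  # illustrative square projection size
    full = full_finetune_params(d, d)
    lora = lora_params(d, d, rank=16)
    print(full, lora, f"{lora / full:.2%}")
```

For one 4096 × 4096 matrix, a rank-16 LoRA trains 131,072 parameters versus 16,777,216 for a full update, about 0.78%. GaLore, by contrast, still updates the full weight matrix while projecting gradients into a low-rank space to save optimizer memory.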

Summary

The open-source dataset generation project I made, Augmentoolkit, is now better and comes equipped with tried-and-true features for building great domain-expert LLMs. To celebrate, I’ve also released a new demo LLM and dataset. Finally, if you’re training your own models, you should add 30–50% generic assistant data, use a large system prompt during training, do a full finetune, and use a low temperature at inference time.

I hope you enjoyed this discussion of some recent work I did! If you need more support with your AI work or projects, the free Skool community I mentioned I was starting is now open to everyone reading this newsletter! You can join it by following the link here; it has a free prompting course too. There are no upsells, just value. Our first Q&A call is coming up this Saturday, July 6th, so if you have any tough AI problems you want to talk through with me, that’s the time!

Anyway, that’s all for this week. Thanks for reading, have a good one and I’ll see you next time!