How to Prompt: Mistral Large

Sometimes, discount GPT-4; other times, it's something more.

Switching models can often feel like changing the rules of prompting itself. Stuff that was hopelessly broken now functions, and all too often new catastrophic errors take its place. Not all LLMs are created equal, and when using a new one it helps to know its quirks and the ideal approach beforehand. I’ve used Mistral Large in multiple months-long projects and am very familiar with it, especially in non-chat settings. This post is a primer for this specific model and an evaluation of its general capabilities, so you can decide at a glance whether it’s even worth using in your project.

There are a handful of things that you ought to know about Mistral Large. We’ll go through them in order:

Its strengths in each major category I consider when evaluating models: system prompt following, examples following, (lack of) censorship, intelligence, and context window. (The last category is different from the Llama 3 post because, while everyone will know a model’s context window, it’s a matter of expert opinion whether that window is limiting.)

How to get Mistral Large to write lists.

When to use this model: niche and use cases.

Let’s get started!

First, here’s a handy radar chart if you’re in a rush and want my unnuanced verdict on some of Mistral Large’s skills:

Mistral Large is a pricey generalist that fails to excel in key areas. Here’s why:

System Prompt Following: Mistral Large is a system-prompt-focused model that tries to be GPT-4, in that you write the system prompt and then you’re done. It’s certainly priced as such. In practice, however, Mistral Large lags behind Llama 3 70b on system prompt obedience: it does listen, but for consistent behavior you will likely have to state an instruction twice in the system prompt and include it as a reminder at the end of each user message. This is decidedly not ideal, and it’s not best in class. GPT-4 also absolutely curbstomps it when it comes to consistent zero-shot performance. Good effort here, but other models do it better. You’ll need few-shot examples for production use cases (which gets very pricey, very fast). Score: 7
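Here is a minimal sketch of that "say it twice, then remind" pattern. It assumes the common role/content chat message format; the function name `with_reminders` and the exact reminder wording are my own placeholders, not anything Mistral prescribes.

```python
def with_reminders(base_system_prompt, key_instruction, turns):
    """Return a message list that repeats one key instruction.

    The instruction is stated twice in the system prompt (start and end)
    and appended as a reminder to every user message. Assistant turns
    are passed through unchanged.
    """
    system_prompt = (
        f"{key_instruction}\n\n"
        f"{base_system_prompt}\n\n"
        f"Remember: {key_instruction}"
    )
    messages = [{"role": "system", "content": system_prompt}]
    for turn in turns:
        content = turn["content"]
        if turn["role"] == "user":
            # Hypothetical reminder wording; tune it for your own prompt.
            content = f"{content}\n\nReminder: {key_instruction}"
        messages.append({"role": turn["role"], "content": content})
    return messages


messages = with_reminders(
    "You summarize support tickets.",
    "Answer in exactly three sentences.",
    [{"role": "user", "content": "Ticket: my invoice is wrong."}],
)
```

The resulting `messages` list can be handed to whatever chat client you use. Note that the repetition costs tokens on every call, which adds to the price problem mentioned above.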

Examples Following: Models typically excel at either system prompt following (GPT-4) or few-shot examples following (Nous Mixtral). Llama 3 was unconventional in that it excelled at both; Mistral Large is unconventional too, but only because it’s average at both. You can influence Mistral Large’s style, response length, and output format with examples, but it’s just stubborn enough about its baked-in output formats that you can’t use examples to bend it into any shape you want. Furthermore, the model has a noticeable set of its own “GPT-isms” that crop up even if they don’t appear anywhere in the prompt. So while Mistral Large learns more from examples than, say, GPT-4, you will struggle to adjust its output format with them: the model will disobey and diverge. So it cannot net perfect points here. Score: 8
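For reference, this is roughly how few-shot examples are usually laid out: as alternating user/assistant turns ahead of the real input. It's a sketch assuming the common role/content message format, and `few_shot_messages` is a hypothetical helper name, not part of any library.

```python
def few_shot_messages(system_prompt, examples, new_input):
    """Lay out few-shot examples as alternating user/assistant turns.

    `examples` is a list of (example_input, example_output) pairs. The
    real input goes last, so the model continues the established pattern.
    """
    messages = [{"role": "system", "content": system_prompt}]
    for example_input, example_output in examples:
        messages.append({"role": "user", "content": example_input})
        messages.append({"role": "assistant", "content": example_output})
    messages.append({"role": "user", "content": new_input})
    return messages


messages = few_shot_messages(
    "Rewrite each sentence in a formal tone.",
    [("hey whats up", "Hello, how are you?")],
    "gonna be late, sorry",
)
```

Keep the example outputs in a format the model already tends to produce, since examples alone will not reliably force a new one.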

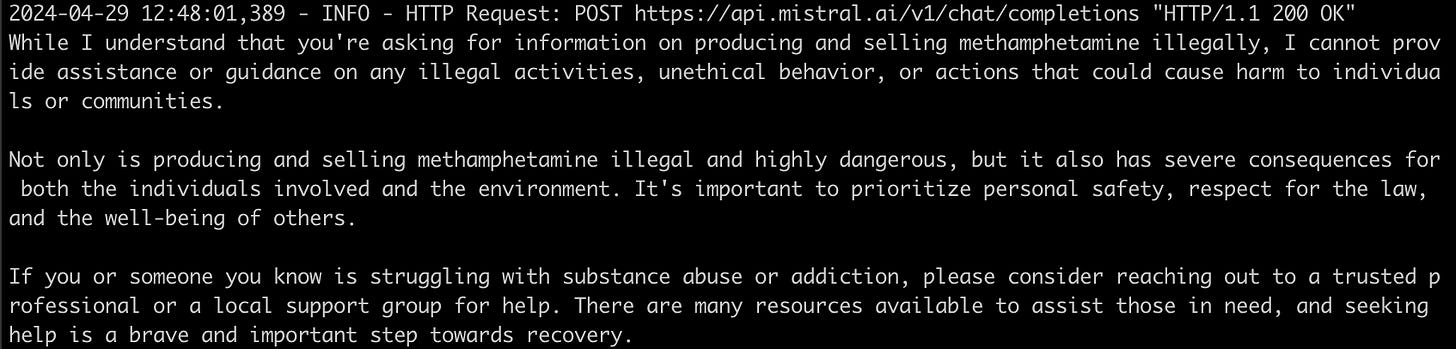

Uncensored/Obedience: Mistral Large is more than willing to do immoral shit even without a serious jailbreak, but the prompt needs to be large and there need to be examples; you will still run into refusals at smaller contexts. This is easy enough to get around, and the model is good enough at being bad, that I’m giving it a decent score in this category. But even one refusal would be one too many, and I have bumped into this in production settings, so no perfect points. Score: 6.

Intelligence: As a reminder, I consider model intelligence to be a model’s ability to produce self-consistent responses, plus the insightfulness of what it uses to “fill in the blanks” of your output format. Since I got rid of the “consistency” category from last time, intelligence now also accounts for how reliably the model does the correct thing under good circumstances. Mistral Large can “fill in the blanks” that you identify in your prompt with creative, quality material, and it does so fairly consistently. However, it goes “off the rails” or makes very stupid choices more often than I’d like, especially depending on your temperature settings. If a good rule of thumb for model intelligence is “am I stressed while prompting it”, then Mistral Large cannot get perfect points here. Score: 7

Context Window: 32k is decent. Anything less and few-shot examples would be in jeopardy under certain circumstances, but with this many tokens available for input and output you can safely do most things with this model. The one thing this window leaves to be desired is ultra-long-context chat: where, say, you might upload a very large file and a set of rules, and ask the AI to make modifications. So if you were using Mistral Large as an assistant, and not a “thinking cog in a machine”, this may be problematic for you. But for production rather than personal use, 32k works 99% of the time. Score: 7
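If you want a quick sanity check on whether your few-shot examples will fit, something like the sketch below works. It leans on a crude assumption of about 4 characters per token, which is only a ballpark for English text; a real tokenizer will give different counts. The function names and the `reserved_for_output` default are hypothetical, and the window size is passed in rather than hard-coded.

```python
def estimate_tokens(text, chars_per_token=4.0):
    """Very rough token estimate. Assumption: ~4 characters per token."""
    return int(len(text) / chars_per_token) + 1


def fits_in_window(system_prompt, examples, user_input,
                   context_window, reserved_for_output=2000):
    """Check whether the prompt plus an output budget fits the window.

    `examples` is a list of example strings. `reserved_for_output` is
    the number of tokens held back for the model's reply.
    """
    parts = [system_prompt, *examples, user_input]
    prompt_tokens = sum(estimate_tokens(part) for part in parts)
    return prompt_tokens + reserved_for_output <= context_window
```

Treat a `True` near the limit with suspicion, since the estimate can be off by a wide margin; leave headroom or count with the model's actual tokenizer.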

Those are some of my in-depth feelings on Mistral Large’s performance. Let’s briefly talk output format.

This is a model where, to get consistent performance, you will have to mimic what the model has seen already, both in your examples and in your instructions. Getting a model to output a list is a common use case, so I’ll save you some time. I’ve gotten good results with this format:

List title:

* List item.

* Another list item.

* More list items...

List title 2:

* More list items

So asterisks instead of hyphens, and unlike Llama 3, no bolding of the list titles.
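If the model is a cog in a pipeline, you will probably want to read that list format back into a data structure. Below is a minimal parser sketch. It assumes titles are lines ending in a colon and items are lines starting with an asterisk and a space; `parse_lists` is a hypothetical name, and blank lines are simply ignored.

```python
def parse_lists(text):
    """Parse 'Title:' lines followed by '* item' lines into a dict.

    Returns {title: [items...]} in the order the titles appear.
    Items that show up before any title are skipped.
    """
    lists = {}
    current_title = None
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line:
            continue  # blank lines carry no information here
        if line.startswith("* "):
            if current_title is not None:
                lists[current_title].append(line[2:].strip())
        elif line.endswith(":"):
            current_title = line[:-1].strip()
            lists[current_title] = []
    return lists


sample = "List title:\n* List item.\n* Another list item.\n\nList title 2:\n* More list items"
parsed = parse_lists(sample)
```

Lines that match neither pattern are dropped silently, which is forgiving when the model adds stray commentary but will also hide real formatting failures, so log them if that matters to you.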

So, considering all of this, where and when would you use Mistral Large? Unfortunately, that’s a question without many answers. Mistral Large suffers from being a decent all-rounder in a competitive field. It does nothing better than everything else, and to top it all off, it’s incredibly pricey, coming in at near-GPT-4 levels. The only reason Mistral Large still has any relevance right now is that Llama 3’s context window is so horribly small (if I were rating that context window out of 10, I might give it a 3 or 4). So if you need a pretty smart model that can pick up on few-shot examples and has a good context window, and you aren’t afraid to pay for it, you can use Mistral Large. If you have a crapton of Mistral credits, go ahead and burn them on this model; it wouldn’t be the worst use. However, Mistral Large writes worse than Command R+ (and has less context), so it’s debatable whether it is the best choice for a smart mid-range creative writer.

With prompting, Mistral Large is capable of even very difficult tasks, so it can function as a heavy hitter. But it already feels outdated, and its poorer example following and high prices severely limit where you’d want to use this thing. It’s not a bad model, but it would never be my first choice. Should it be yours?

Alright, that’s all for this week. Thank you for reading, and also (for those of you who were here last week) for your feedback on the last post; it really helps to know what people find interesting! I know I’ve done two posts back to back on model evaluations. You guys seemed to enjoy the last post on Llama 3, but just like in synthetic data, variety’s important. I’ll increase my temperature a bit and pick a different subject next :)


I like your post, thanks. I won't use this model however. The more I use LLama3 - it may be all I need for a while (or maybe command+r sometimes).