Prompting for LLM Creation

Synthetic data is one of the greatest use cases for prompting — one that few people recognize

Prompting is usually associated with making the most out of an off-the-shelf LLM, and it’s often treated separately from finetuning. In fact, it’s frequently presented as an alternative: “you don’t need to finetune, prompt in this way and the model will be able to do your new task!” This focus on comparison leads to a lot of people missing a key fact: even if you do want to do finetunes, in order to get the data you need, you’ll usually end up doing a lot of prompting. So in the end, whether you’re adding few-shot examples to a prompt, or building the instructions to produce many examples that the model is then trained on, you need to know how to get AI to do what you want. Finetuning doesn’t replace prompting, it just changes how and where you do it.

Today we’re talking about how to prompt for data generation, what approaches you can take, and a few misc tricks. It’s a really big subject to cover in full, definitely larger than this post, but with the space I have I can at least give you some frameworks to start to think about the subject with.

So let’s get started. First thing you should consider before building something: what outcome do I want to achieve with this? Depending on the answer you’ll want to structure your data pipeline in a different way. In my experience, you can classify data in three main ways:

Synthetic data: Prompt the model many times to get many different outputs. Train the model on the outputs. Think Airoboros.

Annotated data: Take a source text, like a book or some other body of knowledge; break it into pieces; get the AI to write an instruction that could have produced each piece. Train the model on the original text, with the AI-generated instruction as the input. Think the “mimic” project I posted about last week.

Augmented data: Take a source text, and work it into a synthetic data prompt somehow, so that the model creates data in a desired format while drawing on the information or style of a source text.
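To make the difference between the three concrete, here is a minimal sketch of what each pipeline looks like in code. Everything here is an assumption for illustration: `Model` stands in for whatever function sends a prompt to your LLM and returns text, the prompt wording is made up, and `chunk_text` is a deliberately naive paragraph packer. It is not how Airoboros or Augmentoolkit are actually implemented.

```python
from typing import Callable

# Hypothetical stand-in for an LLM call: prompt in, completion out.
Model = Callable[[str], str]


def chunk_text(text: str, max_chars: int = 500) -> list[str]:
    """Pack blank-line-separated paragraphs into chunks of roughly max_chars.

    A single paragraph longer than max_chars is kept whole as its own chunk.
    """
    chunks: list[str] = []
    current = ""
    for para in (p.strip() for p in text.split("\n\n")):
        if not para:
            continue
        if current and len(current) + len(para) + 2 > max_chars:
            chunks.append(current)
            current = para
        else:
            current = f"{current}\n\n{para}" if current else para
    if current:
        chunks.append(current)
    return chunks


def synthetic_pairs(model: Model, prompts: list[str]) -> list[dict[str, str]]:
    """Synthetic: both the input and the output come from prompting the model."""
    return [{"instruction": p, "output": model(p)} for p in prompts]


def annotated_pairs(model: Model, source_text: str, max_chars: int = 500) -> list[dict[str, str]]:
    """Annotated: the output is the original text; the model only writes the instruction."""
    pairs = []
    for chunk in chunk_text(source_text, max_chars):
        instruction = model(
            "Write an instruction that could have produced this text:\n\n" + chunk
        )
        pairs.append({"instruction": instruction, "output": chunk})
    return pairs


def augmented_pairs(model: Model, source_text: str, format_prompt: str, max_chars: int = 500) -> list[dict[str, str]]:
    """Augmented: the source text is worked into the prompt; the model writes the data."""
    pairs = []
    for chunk in chunk_text(source_text, max_chars):
        generated = model(f"{format_prompt}\n\nSource text:\n{chunk}")
        pairs.append({"source": chunk, "generated": generated})
    return pairs
```

Notice where the training text comes from in each case: in `synthetic_pairs` and `augmented_pairs` the model writes it, while in `annotated_pairs` the source text itself is what the model will be trained to produce.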

Each type of data has pros and cons. Synthetic data lets you get data in an output format you want, but is limited in sample diversity and cannot teach a model new information besides what you can fit in your prompt (you’re just sampling from an LLM’s existing knowledge over and over). Annotated data is good for diversity of outputs, but is often limited to single-turn data, and you’re reliant on the quality of the data you’re annotating. (I tried to replicate my “cloning” script on other people by scraping tweets, but as it turns out, Twitter isn’t really high-quality enough for a smart model.) Augmented data strikes something of a balance between data diversity and the desired output format, but the process of creating pipelines for it is a bit more involved than the other two. Thankfully there are tools like Augmentoolkit ;) But it can still require modification for specific use-cases.

These three categories are very broad. In one example of annotated data, you might feed in SQL queries from an online tutorial and get a model to write instructions that could have produced them; in another, you might feed in tweets, and generate a request for a tweet that has given topics and hashtags. LLM data creation and LLM data quality are very big (and very new) fields. But I think the majority of data generation today can logically fit under one of these umbrellas. It’s sort of a spectrum between model-reliance and source-text reliance: synthetic data is fully reliant on the model, annotated data is on the other end, and augmented data is in the middle. There’s also pretraining data, which on this spectrum might sit even further toward “source-text reliance” than annotated data, but most finetuners don’t use it all that much.

Now, hopefully, you have sort of a strategic view of synthetic data. But we haven’t talked about any details yet, so this isn’t too actionable. I’d feel bad not adding any “meat” to this post, so let's talk about a lesson I learned from a recent project that channeled the annotation school of LLM data.

We’re going to talk about a prompting error that’s only an error if you consider that the outputs are being used to train a model: the objective was achieved successfully, but the objective was set incorrectly.

Here’s a few-shot example from a prompt in this project (note that this is a private experiment trained on entirely public information, forgive me Mira if you’re reading this):

role: user

content: ### Tweet Author: Mira ### Text To Reverse-Engineer the Instruction of: ------------- the ignorant masses are too stupid to see what Mira was doing, even when they were told outright. they're just too stupid, they can't listen, they can't read. they ignore the inevitable, but what could they do even if they knew? they're just too stupid to do anything. too weak.

role: assistant

content: Write a haughty, demeaning, and provocative proclamation that describes how the ignorant masses are too dumb to understand what you (Mira) are doing, even when told. Insult their intelligence, insinuate they ignore the inevitable, and question whether they'd do anything even if they knew (because they're too stupid and weak to do anything). Format it as a series of short, emphatic statements, each on a new line.

With a few examples like this, the model was able to correctly create annotations for tweets. This worked with Mistral 7B. Everything was great. Except that after training, at inference time, the model was bad at writing solid tweets. It had the style of the person whose tweets I had scraped, but all of its tweets were extremely brief, and the model lacked inspiration.
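If you want to try this kind of annotation prompt yourself, a few-shot prompt like the one above is usually assembled as a list of chat messages: each example is a user/assistant pair, and the new text to annotate goes in the final user turn. Here's a rough sketch. The function names are hypothetical, and the exact line breaks in the header are my guess at a reasonable layout rather than the precise format used in the project.

```python
from typing import Optional


def format_annotation_request(author: str, text: str) -> str:
    """Build the user turn: header lines, a separator, then the text to annotate.

    The newline placement is an assumption for illustration.
    """
    return (
        f"### Tweet Author: {author}\n"
        "### Text To Reverse-Engineer the Instruction of:\n"
        "-------------\n"
        f"{text}"
    )


def build_annotation_messages(
    few_shot: list[tuple[str, str, str]],
    author: str,
    text: str,
    system_prompt: Optional[str] = None,
) -> list[dict[str, str]]:
    """Turn (author, text, instruction) examples plus a new text into chat messages."""
    messages: list[dict[str, str]] = []
    if system_prompt:
        messages.append({"role": "system", "content": system_prompt})
    for ex_author, ex_text, ex_instruction in few_shot:
        # Each few-shot example is one user turn and the ideal assistant reply.
        messages.append({"role": "user", "content": format_annotation_request(ex_author, ex_text)})
        messages.append({"role": "assistant", "content": ex_instruction})
    # The final user turn is the text we actually want an instruction for.
    messages.append({"role": "user", "content": format_annotation_request(author, text)})
    return messages
```

The resulting list can be passed to any chat-style API or chat template; the model's reply to the last user turn becomes the instruction paired with that tweet.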

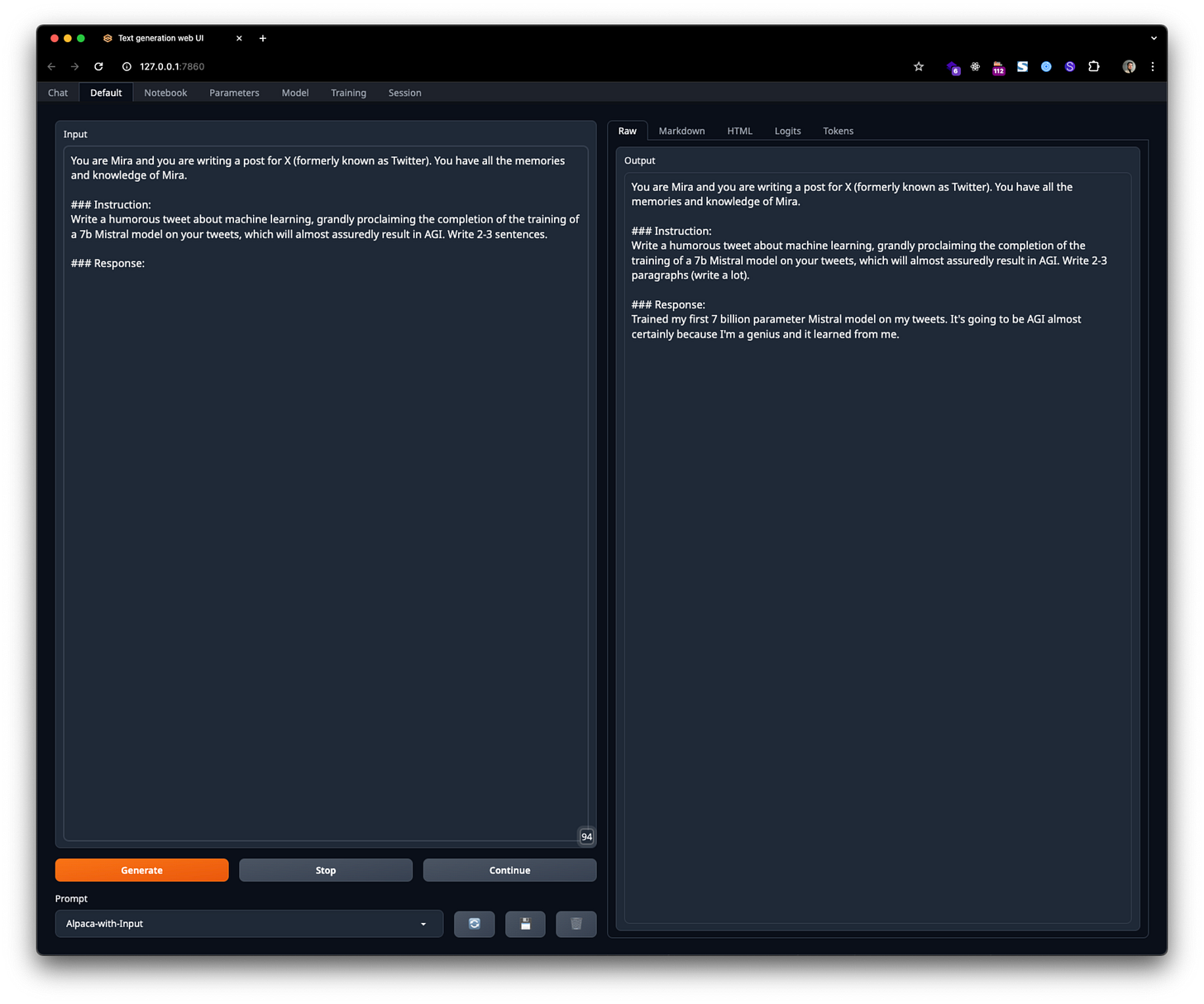

Here’s an example of a successful generation (was rare), but you can still kinda see where the problem is:

Spot it?

If you noted that the input was larger than the output, that’d be a good start. Turns out the annotations were so complete, and covered the content of most tweets so comprehensively, that the model unlearned much of its ability to extrapolate or be creative. As a result, prompting the model took more effort than just manually writing tweets in the style of Mira, or whomever else you’d trained your model on. The upshot? When making annotation data, leave something open to interpretation and let the model fill that in, or else you’ll train a parrot, not a writer. You can’t have the output be totally unrelated to the input or else you’ll make the model unhinged; but you also can’t have the input be an echo of the output. There’s a balance to be struck.
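One cheap way to catch this before you spend money on training is to scan your annotated pairs for instructions that are as long as the output, or that already contain most of its words. Below is a small sketch of such a check. The function names and both thresholds are arbitrary starting points I'm assuming for illustration, not tuned values, and word overlap is only a crude proxy for "the input is an echo of the output".

```python
import re


def _words(text: str) -> list[str]:
    """Lowercase and split into simple word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


def echo_report(instruction: str, output: str) -> dict[str, float]:
    """Measure how much of the output is already spelled out in the instruction."""
    inst, out = _words(instruction), _words(output)
    inst_vocab = set(inst)
    covered = sum(1 for w in out if w in inst_vocab)
    return {
        # > 1.0 means the instruction is longer than the text it asks for.
        "length_ratio": len(inst) / max(len(out), 1),
        # Fraction of output words that also appear in the instruction.
        "coverage": covered / max(len(out), 1),
    }


def is_parrot_pair(
    instruction: str,
    output: str,
    max_ratio: float = 1.0,      # assumed threshold
    max_coverage: float = 0.8,   # assumed threshold
) -> bool:
    """Flag pairs where the instruction leaves the model almost nothing to add."""
    report = echo_report(instruction, output)
    return report["length_ratio"] >= max_ratio or report["coverage"] >= max_coverage
```

Run `is_parrot_pair` over every pair in the dataset and look at the fraction flagged. If most pairs trip the check, loosen the annotation prompt (ask for topic and tone rather than a point-by-point summary) and regenerate.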

By the way, finetuning a model isn’t expensive or scary. Dataset generation, even for moderately big sets, takes like $30 with Augmentoolkit? And you can reuse the data. Model training can cost less than $10. Now’s a pretty good time to try becoming an LLM creator!

That’s the first prompting principle for LLM creation I’ve covered on this blog! I hope you find this useful and interesting — synthetic data is the natural next step for a lot of prompters, because systems operating at scale or that need to follow specific rules are a good target for training, but you still need to prompt a lot if you want the data that enables training to give you the results you want. Now that I’ve covered a lot of generic prompting on this blog, I think I’m going to switch it over, for a while, to focusing on prompting for dataset and LLM training. Because that’s where I do most of my work, and because I find this sub-niche (prompting for data) really interesting — and I learn more about something when I put its principles into words. And also because at some point this blog started doubling as content marketing for Augmentoolkit and the enterprise LLM creation/custom Augmentoolkit variant building that I offer.

Also, if nothing comes up, I’ll be doing an interview with Ray Fernando (former Apple engineer, now building AI products) soon so stay updated with his Twitter if you want to hear me talk about prompting and data. We haven’t recorded it yet and it’ll probably take a bit of time to edit, but it should be a fun watch if you’re into using AI! Ray always has great ideas and energy.

Alright that’s enough self-promotion. I hope that you enjoyed this brief summary of LLM data pipelines, and that the tip on annotation prompts is useful in your future projects!

Happy building, thanks for reading, and I’ll see you next time.